AI technology development and privacy protection: Balancing the challenges of innovation and security

People's Political Consultative Conference Network

_Second time_

It is difficult for us to arrive at the scene immediately, but we will speak out authoritatively the second time. The second time, I will be with you.

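△ Photo by staff reporter Qi Bo

On April 23, the Beijing Internet Court announced its first-instance judgment in China's first personality rights infringement case involving an AI-generated voice. The court held that, provided the voice is identifiable, the protection of a natural person's voice rights extends to AI-generated voices. The case is a reminder that in the digital age, rapidly developing AI technology has brought unprecedented convenience to our lives. However, cases of "AI face-swapping" fraud and of AI-generated voices infringing on other people's voice rights keep emerging, and the regulated use and management of AI has drawn wide attention from all sectors of society.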

Beware of having your face and voice "stolen"!

"Hello, please look at the camera and scan your face to check in!" In recent years, with the rapid development of information technology, and AI technology in particular, face recognition has gradually spread into every aspect of people's lives.

Tan Jianfeng, member of the 13th National Committee of the Chinese People's Political Consultative Conference and president of the Fifth Academy of Space Information Technology, said that biometric data such as faces is unique and unchangeable, and counts as important personal privacy data. "Currently, personal privacy data is subject to indiscriminate exploitation, overuse, and even fraudulent and improper use. There are also risks in how the data is processed and stored. In addition, the technology itself has security loopholes that leave it open to attack and cracking."

Tan Jianfeng explained that countries mainly respond to the risks of "face-scanning" technology through legislative supervision and industry self-discipline. "Both in China and abroad, the rules emphasize that personal consent must be obtained when collecting face data. In practice, however, because of differences in knowledge levels, the 'right to know' is easily overlooked, and services are refused to anyone who will not hand over their face. The 'informed consent' principle, one of the legislative foundations of personal information protection, becomes virtually meaningless."

In this regard, Wu Jiezhuang, member of the National Committee of the Chinese People's Political Consultative Conference and Chairman of the Board of Directors of Gaofeng Group, also pointed out that processing facial information with face recognition technology requires a specific purpose and sufficient necessity, and must be authorized and agreed to by the individual. The impact on personal rights and interests and the possible risks should also be objectively evaluated.

"Therefore, to manage personal privacy data well, in addition to effectively ensuring technical security, we must also improve the relevant laws and regulations and build a risk management system by strengthening technical standards and raising the level of supervision, so that technological innovation and the use of personal information can be balanced," Wu Jiezhuang suggested.

As the threshold for "AI+" falls and its scope of application widens, a person's voice can now be "cloned" from a sufficient number of voice samples. This has left people worried: has my voice been cloned by AI? How can I protect my own voice rights?

Tan Jianfeng said that because China's Civil Code was the first to make a natural person's voice an object of protection, the trial of this "voice theft" case has attracted widespread public attention. In the end, the court provided judicial protection on the grounds of infringement of personality rights and interests, which is highly typical and significant, and demonstrates an attempt to apply the law to new technologies. "But we should also note that in practice, such cases usually face the problem that rights are hard to defend: evidence is hard to collect, proof is hard to present, and identification is hard to establish. These problems can be further resolved as subsequent related cases are handled."

Tan Jianfeng added that voice, like facial data, has person-specific characteristics. A voiceprint is also important biometric data that can be used for identification, so it is sensitive personal data as well. "Therefore, this case may not only involve infringement of personal rights and interests, but may also fall within the scope of personal information protection."

Use technical means to deal with AI technical risks

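As artificial intelligence technology flourishes, AI models such as large language models (LLMs) and diffusion models keep achieving breakthroughs and show enormous potential to transform every industry. Along with this, "AI resurrection", "digital immortality", ChatGPT, Sora, and similar technologies have become topics of heated discussion.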

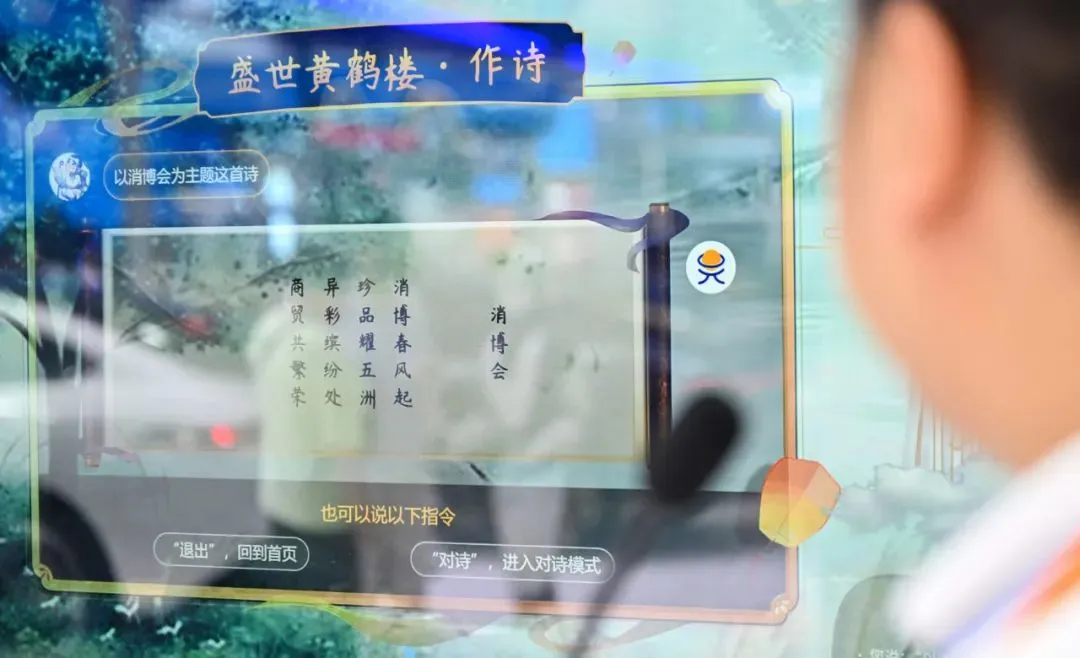

△ On April 15, visitors watched an AI compose poems at the Consumer Expo. Photo by Xinhua News Agency reporter Guo Cheng

"Large models are tools. They can become helpers of good people or accomplices of bad people." In the view of Zhou Hongyi, member of the National Committee of the Chinese People's Political Consultative Conference and founder of the 360 Group, dealing with the risks that AI applications may bring requires appropriate technical means in addition to stronger supervision. "For example, one could add an internal watermark that cannot be altered or replaced to videos generated by Sora, and then design a program to read it. A query would then show whether a given video carries the watermark."

"Only by facing AI can we embrace AI." In Tan Jianfeng's view, deep synthesis technology can now bridge the virtual space of the network and the real physical world and integrate avatars into real-life scenes. Its effects are intuitive and easy to see, and its path to commercialization is clear. "But this type of technology hides many technical risks. Creating virtual characters from a natural person's image or related biometric data without authorization may also constitute infringement. In addition, the existence of avatars and digital humans blurs the boundary between true and false information, resulting in social cognitive dissonance. Currently, there are not only ethical disputes, but also many blind spots in legal supervision."

So how can AI technology be kept from being abused? Tan Jianfeng said that systemic risk prevention and control must go hand in hand with accelerating the development of artificial intelligence, and can be pursued on three fronts: demand, supply, and supervision. On the demand side, users should receive reasonable education and guidance, and reasonable rules and constraints should be placed on who may use the technology and for what purposes. On the supply side, technology providers should attend to technical ethics: they must reach unified technical standards and specifications, and the enterprises concerned must also exercise self-discipline so that the application of technology has a bottom line. In terms of legal supervision, the accuracy, transparency, and stability of prevention and control measures should be improved.

On the supervision and governance of artificial intelligence, Du Lan, member of the Guangdong Province CPPCC and president of the Guangdong Province Artificial Intelligence Industry Association, mentioned three forces: the power of institutions, the power of industry, and the power of technology. "First, on the basis of accurately defining the various issues, the responsible parties should be clarified so that the system takes effect. Second, to effectively balance the development and governance of the artificial intelligence industry, technology companies should actively treat AI security and ethical governance capabilities as part of their competitiveness and as a driving force for the high-quality and sustainable development of enterprises."

Du Lan further stated that a large number of security and ethical issues must be solved through technical means. First, technicians need to fully consider security and ethical issues when developing technology; second, defense tools must be developed specifically to counter cybercrimes that use artificial intelligence.

AI development needs to pay more attention to safety and ethics

"Currently, many large model technologies rely mainly on being fed big data, so the biggest risk point lies in data management and control," Tan Jianfeng said.

How can this risk be avoided? Tan Jianfeng said that reasonable regulatory measures need to be formulated to strengthen the management of related applications. First, ensure that algorithms are transparent and interpretable. Second, strengthen data security management. This means ensuring that data sources are legally compliant, updating data in a timely manner, correcting non-compliant content, and above all protecting input data and preventing data leakage. Intellectual property rights must also be protected so that generated content does not infringe. Red lines must be drawn for output content, which should be strictly supervised and reviewed and must comply with applicable legal provisions.

"Although the country has successively promulgated the Cybersecurity Law of the People's Republic of China, the Measures for the Management of Generative Artificial Intelligence Services, and other rules, AI technology is constantly evolving, and the corresponding supporting measures should also be updated in a timely manner." Tan Jianfeng suggested that, first, the governance goal should shift from controlling individual products to regulating the technical system as a whole. Risk prevention should also be moved forward appropriately, with regulatory technology applied at the source, at the stage when front-end research and development projects are established. Second, regulatory means should be properly integrated. For generative artificial intelligence, it is necessary not only to make corresponding adjustments in the various sub-fields, but also to integrate the system well, make good use of the combined force of technology and law, and build a highly applicable legal system for artificial intelligence technology.

A "two-pronged" approach to development and governance

"While promoting the innovative development of artificial intelligence, we must also pay attention to its governance. These two wheels need to turn at the same time." In Zhou Hongyi's view, achieving "reliable, good, credible and controllable" development of large models will be key to China's ability to enhance its influence and competitiveness in cyberspace.

How can this goal be achieved? Zhou Hongyi made three suggestions. First, relevant departments should use open-competition mechanisms (literally "unveiling the list and taking charge") to encourage and support enterprises with both "security and AI" capabilities, so that they can better play their role in solving the security problems of general-purpose large models. Second, the state should study and formulate a standard system for the security of general-purpose large models, promote security evaluations and the adoption of security services for these models, and reduce their security risks. Third, the government, central state-owned enterprises, and enterprises with both "security and AI" capabilities should cooperate in depth on large model security, giving full play to the advantages of such enterprises in the field of artificial intelligence security.

"Effective artificial intelligence governance is inseparable from the participation and support of all parties. It is necessary to establish a scientific supervision system that integrates the upper and lower levels, so that regulatory policies are more targeted and effective, and to encourage all types of innovation entities to take an active part in the governance process. On the one hand, the identification and evaluation of artificial intelligence risks should be unified and transparent. On the other hand, technical standards, market access, data supervision, and other means should be strengthened to actively prevent the risks of technology application. In addition, strengthening international collaboration is also important," Tan Jianfeng suggested.

In Tan Jianfeng's view, AI governance should follow the development laws of the industry, grasp the essential characteristics of AI technology, and draw six lines for the future: research must have a "line of sight" and be brave in innovation and breakthroughs; manufacturers must have a "bottom line"; application must have "boundaries", with rules for how technology is applied; supervision must have a "high-voltage line", with active action taken against security threats; development must have a "contour line" that balances the interests of multiple parties; and governance must have "parallel lines" that take care of a wide range of social groups.

Several CPPCC members said in interviews that overly strict supervision of AI may inhibit innovation, and that it is particularly important to find a balance between strong supervision and industry innovation. So how can a regulatory mechanism be built that adapts to the changing characteristics of AI and meets the needs of social development and governance? Committee members generally believe that regulatory measures need to be strict.

"In fact, in the early days of the Internet, China had already proposed the principle of 'inclusiveness and prudence', which generally encourages innovation but also takes risk regulation into account. This principle has been relatively effective for a long time," Wu Jiezhuang said.

"I believe that in the intelligent network stage, supervision will be able to lead technological innovation. From the perspective of supervision, adhering to the principle of prudent intervention, holding to the bottom line of the law, safeguarding the bottom line of personal safety, and implementing scientific, reasonable, and appropriate supervision should make it possible to adapt to the development of intelligent technology," Tan Jianfeng said.

Reporters: Zhou Jiajia and Xu Kanghui

Text editing: Li Chenyang
New media editor: Ma Xinyu (intern)
Review: Li Muyuan